Case Study

Building a complete GitLab CI/CD pipeline to deploy a Dockerized Laravel application on AWS EKS

Agenda:

1. Introduction.

2. Create a GitLab repository.

3. Preparing AWS.

4. Build GitLab CI/CD.

5. Conclusion.

1. Introduction

CI/CD is a methodology focused on consistently delivering applications to clients by integrating automation into various stages of app development. The fundamental principles of CI/CD include continuous integration, continuous delivery, and continuous deployment.

Integrating security into the CI/CD pipeline is also important. This practice, known as DevSecOps, ensures that security measures are embedded throughout the development, testing, and deployment processes rather than being treated as an afterthought. Integrating security into CI/CD offers several benefits:

Early detection of vulnerabilities: By incorporating security checks and automated testing into the CI/CD pipeline, potential vulnerabilities and issues can be identified and addressed early in the development process. This reduces the likelihood of security breaches and minimizes the costs associated with fixing security issues at a later stage.

Faster remediation: When security is an integral part of the CI/CD pipeline, it enables faster detection and resolution of security issues, improving the overall efficiency of the development and deployment processes.

Improved collaboration: DevSecOps fosters better collaboration among development, operations, and security teams, promoting a shared responsibility for application security. This collaborative approach can help identify and address security concerns more effectively.

Compliance: Integrating security into the CI/CD pipeline facilitates compliance with industry regulations and standards. By automating security checks and monitoring, organizations can better demonstrate their adherence to security requirements and mitigate risks associated with non-compliance.

Continuous improvement: A CI/CD pipeline that incorporates security enables a continuous feedback loop, allowing teams to learn from past security incidents and improve their practices over time.

There are numerous platforms and tools available for constructing a CI/CD pipeline, including AWS, Azure, GCP, GitHub, GitLab, Jenkins, and more. In this particular scenario, GitLab has been selected as the preferred solution for implementing the CI/CD pipeline.

1.1 Overview of the CI/CD Pipeline

The CI/CD pipeline can be summarized through the following steps:

Build a Docker image from the Laravel PHP repository.

Push the Laravel image to a Docker Hub repository.

Retrieve the Laravel image from Docker Hub to execute testing.

Upon successful testing, pull an AWS base image to facilitate communication with the AWS Elastic Container Registry (ECR) and push the Laravel image created in step 1.

Establish a connection with AWS Elastic Kubernetes Service (EKS) to deploy the application to the Kubernetes cluster or update the existing deployment.

If time permits, I would be pleased to incorporate security measures into the existing CI/CD pipeline.

2. Create a GitLab repository

After creating a GitLab account:

Click "New project" → "Create blank project" → enter a project name and set the visibility level to Private.

2.1 Build a Docker image

The repository contains a Dockerfile for building the application image.

The Dockerfile copies the entire content of the repository into an image based on the php:7.2-apache-stretch image.
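Since the Dockerfile itself is not reproduced in this text, here is a minimal sketch of what such a file might look like, written out with a shell heredoc. Only the php:7.2-apache-stretch base image and the full-repository copy come from the text. The pdo_mysql extension, the /var/www/html path and the vhost.conf destination are assumptions. PHP 7.2 and Debian stretch are both end-of-life, so a newer base tag is worth considering for anything beyond testing.

```shell
#!/usr/bin/env bash
# Sketch: write a minimal Dockerfile for the Laravel app (assumed layout).
set -euo pipefail

cat > Dockerfile <<'EOF'
FROM php:7.2-apache-stretch

# PHP extension commonly needed by Laravel (assumption: MySQL backend).
RUN docker-php-ext-install pdo_mysql

# Apache configuration: install the virtual host and enable mod_rewrite.
COPY vhost.conf /etc/apache2/sites-available/000-default.conf
RUN a2enmod rewrite

# Copy the whole repository; .dockerignore decides what is skipped.
COPY . /var/www/html
WORKDIR /var/www/html

# Laravel writes logs and caches to these directories at runtime.
RUN chown -R www-data:www-data storage bootstrap/cache

EXPOSE 80
EOF
```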

In a production environment, some files should not be copied into the image. A .dockerignore file lists the paths that Docker leaves out of the build context.
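The entries below are a sketch that assumes a standard Laravel layout. They are not the repository's actual .dockerignore. The list excludes version-control metadata, local secrets and runtime logs. It does not exclude vendor, because the image may rely on it.

```shell
#!/usr/bin/env bash
# Sketch: exclude files that should not end up in the production image.
set -euo pipefail

cat > .dockerignore <<'EOF'
# Version control and CI metadata
.git
.gitlab-ci.yml
# Front-end build dependencies are not needed at runtime
node_modules
# Local secrets and runtime files
.env
storage/logs/*
# Kubernetes manifests are applied separately by the deploy stage
AWS_K8
EOF
```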

The Dockerfile also contains a line that configures the Apache server in the image.

The Apache virtual host is defined in the vhost.conf file in the repository.

In this scenario, port 80 is used mainly for testing and validation, and HTTPS on port 443 has not been implemented yet. HTTPS is generally recommended for security and data protection, but plain HTTP on port 80 can be acceptable for preliminary testing and internal checks.
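This is a sketch of a port-80 virtual host that would suit a Laravel app. It is not the repository's vhost.conf. It assumes the application is copied to /var/www/html, so Apache serves the public/ directory, where Laravel's front controller lives.

```shell
#!/usr/bin/env bash
# Sketch: Apache virtual host serving Laravel's public/ directory on port 80.
set -euo pipefail

cat > vhost.conf <<'EOF'
<VirtualHost *:80>
    # Laravel's entry point is public/index.php, not the project root.
    DocumentRoot /var/www/html/public

    <Directory /var/www/html/public>
        # Allow Laravel's .htaccess rewrite rules to take effect.
        AllowOverride All
        Require all granted
    </Directory>

    ErrorLog ${APACHE_LOG_DIR}/error.log
    CustomLog ${APACHE_LOG_DIR}/access.log combined
</VirtualHost>
EOF
```

Adding HTTPS later would mean a second <VirtualHost *:443> block with certificates, or TLS termination at the load balancer.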

3. Preparing AWS

3.1 Create a new AWS user

When an AWS account is created, the initial user is the root user. Using the root user for regular tasks is not recommended for security reasons. Instead, create a new user with only the permissions the scenario requires. AWS also recommends attaching permissions to groups rather than to individual users. A user's access can then be changed by moving them between groups, which keeps permissions consistent and easier to audit.

3.1.1 Create policies for the group

Per your request, I have created an IAM group named "tempUsers" within AWS, which has been granted read and list permissions exclusively for EC2, EKS, and ECR services. This configuration ensures that users added to this group will have limited access, as specified. By adopting this approach, we are adhering to the recommended best practices for managing permissions within AWS, allowing for enhanced security and streamlined user management.
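The group was set up in the console. The same configuration can be scripted with the AWS CLI, as in the sketch below, which must be run with administrator credentials. The policy name TempUsersReadOnly and the exact action list are assumptions: Describe/List/Get-style actions approximate "read and list" for EC2, EKS and ECR.

```shell
#!/usr/bin/env bash
# Sketch: create the read/list-only "tempUsers" group with the AWS CLI.
set -euo pipefail

POLICY_NAME="TempUsersReadOnly"   # hypothetical policy name

write_policy() {
  cat > temp-users-policy.json <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ec2:Describe*",
        "eks:Describe*",
        "eks:List*",
        "ecr:Describe*",
        "ecr:List*",
        "ecr:BatchGetImage",
        "ecr:GetDownloadUrlForLayer",
        "ecr:GetAuthorizationToken"
      ],
      "Resource": "*"
    }
  ]
}
EOF
}

create_temp_users_group() {
  write_policy
  local policy_arn
  # create-policy returns the new policy's ARN, which the attach call needs.
  policy_arn=$(aws iam create-policy \
    --policy-name "$POLICY_NAME" \
    --policy-document file://temp-users-policy.json \
    --query 'Policy.Arn' --output text)
  aws iam create-group --group-name tempUsers
  aws iam attach-group-policy --group-name tempUsers --policy-arn "$policy_arn"
}
```

Run create_temp_users_group once. Users added to tempUsers then inherit the policy.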

3.1.2 Access the web console as the new user

User: testuser

Password: Dont#Trust@me19 (temporary password)

Use the link to log in; you will be asked to set a new password at first login.
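The console user can also be created from the CLI. In the sketch below, the function name is hypothetical and the group is assumed to be tempUsers as above. The --password-reset-required flag causes the "set a new password at first login" behaviour.

```shell
#!/usr/bin/env bash
# Sketch: create a console user, add it to tempUsers, set a temporary password.
set -euo pipefail

create_console_user() {
  local user="${1:?user name required}"
  local temp_password="${2:?temporary password required}"
  aws iam create-user --user-name "$user"
  # Permissions come from the group, not from policies on the user.
  aws iam add-user-to-group --group-name tempUsers --user-name "$user"
  # Force the user to choose a new password at first console login.
  aws iam create-login-profile \
    --user-name "$user" \
    --password "$temp_password" \
    --password-reset-required
}
```

For example, run create_console_user testuser "$TEMP_PASSWORD". Reading the password from a variable keeps it out of the shell history.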

3.2 Install AWS CLI & eksctl

We need the AWS CLI to configure our AWS services and eksctl to create the EKS cluster.

3.2.1 Install AWS CLI

The AWS Command Line Interface (CLI) is a unified tool to manage your AWS services. With just one tool to download and configure, you can control multiple AWS services from the command line and automate them through scripts. To install it, run the following commands:

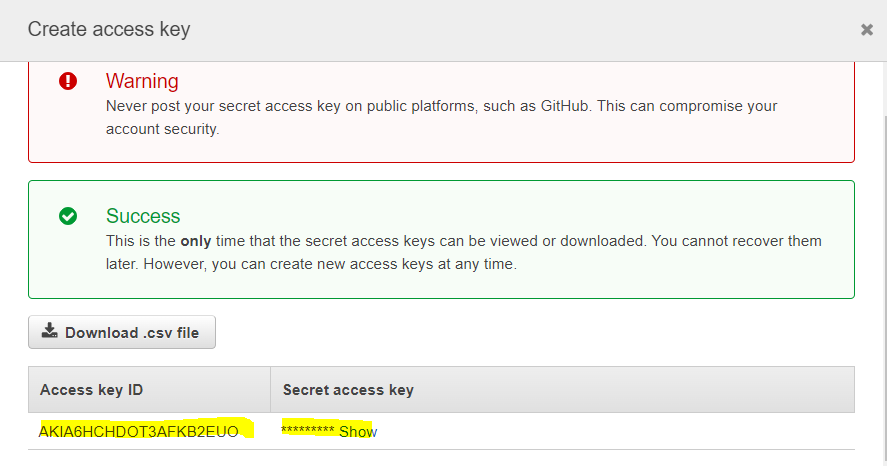

Next, run aws configure and enter:

AWS Access Key ID.

AWS Secret Access Key.

Default region name.
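In scripts or CI jobs, the interactive prompts can be replaced with aws configure set. The sketch below reads the three values from the same environment variable names the pipeline uses later. The AWS CLI also picks up these variables directly when they are exported, so this step mainly matters when the values should persist in ~/.aws.

```shell
#!/usr/bin/env bash
# Sketch: non-interactive equivalent of "aws configure".
set -euo pipefail

configure_aws_cli() {
  # Each value comes from the environment instead of an interactive prompt.
  aws configure set aws_access_key_id "${AWS_ACCESS_KEY_ID:?}"
  aws configure set aws_secret_access_key "${AWS_SECRET_ACCESS_KEY:?}"
  aws configure set region "${AWS_DEFAULT_REGION:?}"
  # Prints the account ID and ARN of the configured identity if the keys work.
  aws sts get-caller-identity
}
```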

3.2.2 Install eksctl

eksctl is a simple CLI tool for creating clusters on EKS, Amazon's managed Kubernetes service. It is written in Go and uses CloudFormation, and it can create a cluster in minutes with a single command. To install it, run the following commands:
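One way to do this is the sketch below, which follows the release-download approach from the eksctl documentation and assumes Linux on amd64. Adjust the architecture suffix for other machines.

```shell
#!/usr/bin/env bash
# Sketch: install the latest eksctl release (Linux, amd64 assumed).
set -euo pipefail

install_eksctl() {
  local platform archive
  platform="$(uname -s)_amd64"          # e.g. Linux_amd64
  archive="eksctl_${platform}.tar.gz"
  curl -sLO "https://github.com/eksctl-io/eksctl/releases/latest/download/${archive}"
  # Unpack into /tmp, then move the single binary onto the PATH.
  tar -xzf "$archive" -C /tmp
  rm "$archive"
  sudo mv /tmp/eksctl /usr/local/bin/eksctl
  eksctl version
}
```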

3.3 Create AWS ECR

Amazon ECR is a fully managed container registry offering high-performance hosting, so you can reliably deploy application images and artifacts anywhere.

Log in to the AWS console as the new user.

Open Amazon ECR.

Create a repository.
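The same repository can be created from the CLI. The name laravel in the usage below is a hypothetical example. Creating a repository needs the ecr:CreateRepository permission, which the read/list-only tempUsers group does not grant, so this sketch assumes administrator credentials. Enabling scanOnPush is optional, but it runs ECR's basic vulnerability scan on every push.

```shell
#!/usr/bin/env bash
# Sketch: CLI equivalent of creating the ECR repository in the console.
set -euo pipefail

create_ecr_repository() {
  local name="${1:?repository name required}"
  # Prints the repository URI, e.g. <account>.dkr.ecr.<region>.amazonaws.com/<name>
  aws ecr create-repository \
    --repository-name "$name" \
    --region "${AWS_DEFAULT_REGION:?}" \
    --image-scanning-configuration scanOnPush=true \
    --query 'repository.repositoryUri' --output text
}
```

For example, AWS_ECR_REPOSITORY=$(create_ecr_repository laravel) captures the URI that the pipeline variable of the same name can hold.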

3.4 Install kubectl

The Kubernetes command-line tool, kubectl, allows you to run commands against Kubernetes clusters.

1. Download the latest release with the command:

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

2. Install kubectl:

sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

3. Test to ensure the version you installed is up-to-date:

kubectl version --client

If you do not have root access on the target system, you can still install kubectl to the ~/.local/bin directory:

chmod +x kubectl
mkdir -p ~/.local/bin
mv ./kubectl ~/.local/bin/kubectl
# and then append (or prepend) ~/.local/bin to $PATH
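The official kubectl installation guide also recommends checking the downloaded binary against its published SHA-256 checksum before installing it. Below is a small helper for that step. The function name verify_sha256 is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: verify a downloaded binary against a published .sha256 file.
set -euo pipefail

verify_sha256() {
  local binary="$1" checksum_file="$2"
  # The published .sha256 file holds only the hex digest, so it is joined
  # with the file name into the "<digest>  <file>" format sha256sum expects.
  echo "$(cat "$checksum_file")  $binary" | sha256sum --check --status
}
```

To use it, download kubectl.sha256 from the same release path as the binary (the download URL with kubectl replaced by kubectl.sha256). Then run verify_sha256 kubectl kubectl.sha256 before the install step. A non-zero exit status means the file should not be installed.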

3.5 Create AWS EKS

Now that we have all the prerequisite binaries and libraries installed, let’s go ahead and create an AWS EKS cluster. To create an EKS cluster with eksctl, run the following command:

This command creates a Kubernetes cluster with two t2.small worker nodes; provisioning can take several minutes.
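The exact command used is not reproduced here. The sketch below is one eksctl invocation that matches the description of two t2.small worker nodes. The node group name laravel-workers and the cluster name in the usage are hypothetical. The region comes from AWS_DEFAULT_REGION.

```shell
#!/usr/bin/env bash
# Sketch: create an EKS cluster with two t2.small worker nodes via eksctl.
set -euo pipefail

create_eks_cluster() {
  local name="${1:?cluster name required}"
  eksctl create cluster \
    --name "$name" \
    --region "${AWS_DEFAULT_REGION:?}" \
    --nodegroup-name laravel-workers \
    --node-type t2.small \
    --nodes 2
}
```

For example, run create_eks_cluster laravel-cluster. The cluster name should match the AWS_K8_CLUSTER_NAME variable used by the pipeline.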

You can then use kubectl to interact with the EKS cluster as follows:
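eksctl normally writes the kubeconfig entry itself. On another machine, or inside a CI job, aws eks update-kubeconfig adds that entry, and kubectl can then query the cluster. The sketch below shows that sequence. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: point kubectl at the EKS cluster and inspect it.
set -euo pipefail

inspect_cluster() {
  local name="${1:?cluster name required}"
  # Writes or refreshes the cluster entry in ~/.kube/config.
  aws eks update-kubeconfig --name "$name" --region "${AWS_DEFAULT_REGION:?}"
  kubectl get nodes -o wide          # the two worker nodes should be Ready
  kubectl get pods --all-namespaces  # system pods (CoreDNS, aws-node, ...)
}
```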

4. Build GitLab CI/CD

With all the requirements in place, let me explain the main part of this case study: the GitLab CI/CD pipeline.

The .gitlab-ci.yml file in the repository defines all the steps for building, testing, and deploying the application.

The file uses the following pipeline variables:

AWS_ACCESS_KEY_ID

AWS_DEFAULT_REGION

AWS_ECR_REPOSITORY

AWS_K8_CLUSTER_NAME

AWS_K8_ECR_SECRET_NAME = regcred

AWS_K8_NAMESPACE = laravel

AWS_SECRET_ACCESS_KEY

DOCKER_REGISTRY_PASSWORD

DOCKER_REGISTRY_USER

In GitLab, to prevent a Merge Request (MR) from being completed if any tests fail during the CI/CD process, you can enforce a rule that requires all pipeline jobs to pass before the MR can be merged. This is done by enabling the 'Pipelines must succeed' merge check.
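The check is normally switched on in the project's merge request settings. It can also be set through the GitLab Projects API attribute only_allow_merge_if_pipeline_succeeds. The sketch below assumes gitlab.com, a numeric project ID, and a personal access token in a hypothetical GITLAB_TOKEN variable.

```shell
#!/usr/bin/env bash
# Sketch: enable "Pipelines must succeed" through the GitLab Projects API.
set -euo pipefail

require_green_pipelines() {
  local project_id="${1:?project id required}"
  # --fail makes curl exit non-zero on HTTP errors (e.g. a bad token).
  curl --fail --silent --show-error \
    --request PUT \
    --header "PRIVATE-TOKEN: ${GITLAB_TOKEN:?}" \
    --data "only_allow_merge_if_pipeline_succeeds=true" \
    "https://gitlab.com/api/v4/projects/${project_id}"
}
```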

The pipeline has five stages, described below.
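To show the overall shape, here is a skeleton .gitlab-ci.yml with the five stages in order. The lowercase stage and job names are derived from the subsection titles and may not match the repository's spelling. The job bodies are placeholders, not the real scripts.

```shell
#!/usr/bin/env bash
# Sketch: stage layout of .gitlab-ci.yml (job scripts are placeholders).
set -euo pipefail

cat > .gitlab-ci.yml <<'EOF'
stages:
  - docker_build_dev
  - build
  - test
  - ecr_push_prod
  - deploy

variables:
  AWS_K8_ECR_SECRET_NAME: regcred
  AWS_K8_NAMESPACE: laravel
  # Credentials (AWS keys, Docker registry password, ...) are defined as
  # masked variables in Settings > CI/CD > Variables, not in this file.

docker_build_dev:
  stage: docker_build_dev
  script:
    - echo "build, tag, save and push the Laravel image"

build:
  stage: build
  script:
    - echo "install Composer dependencies"

test:
  stage: test
  script:
    - echo "run the test suite"

ecr_push_prod:
  stage: ecr_push_prod
  script:
    - echo "push the tested image to ECR"

deploy:
  stage: deploy
  script:
    - echo "apply the AWS_K8 manifests and restart the pods"
EOF
```

GitLab runs the stages in the listed order, and a failing job stops the later stages. This is what makes the "Pipelines must succeed" check meaningful.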

4.1 Docker_build_dev stage

In this section of the pipeline, the following steps are executed:

Retrieve a Docker image that includes the necessary services to build the Laravel image.

Assign an AWS Elastic Container Registry (ECR) tag to the Laravel image, as it will be pushed to ECR upon successful testing.

Store the Laravel image in a specified image path, ensuring its availability for subsequent stages.

Push the Laravel image to the container registry to facilitate its use in the upcoming stages of the pipeline.

Note: In a production environment, it is more efficient and practical to utilize a single container registry, such as AWS Elastic Container Registry (ECR). This approach streamlines the management of container images and minimizes the complexity that arises from handling multiple repositories.
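The four steps above could be implemented with a job script like the sketch below, assuming the job runs with a Docker daemon available (for example docker:dind). Several names are assumptions: the Docker Hub repository laravel, the commit-SHA tag (GitLab's predefined CI_COMMIT_SHORT_SHA), the image/laravel.tar path, and the convention that AWS_ECR_REPOSITORY holds the full repository URI.

```shell
#!/usr/bin/env bash
# Sketch of the docker_build_dev job script.
set -euo pipefail

docker_build_dev() {
  # Hypothetical naming: Docker Hub repo "laravel", tagged with the commit SHA.
  local hub_image="${DOCKER_REGISTRY_USER:?}/laravel:${CI_COMMIT_SHORT_SHA:?}"
  local ecr_image="${AWS_ECR_REPOSITORY:?}:${CI_COMMIT_SHORT_SHA}"

  docker build -t "$hub_image" .
  # Tag for ECR now so the ecr_push_prod stage can push without rebuilding.
  docker tag "$hub_image" "$ecr_image"

  # Save the image (with its ECR tag) as a tarball kept as a job artifact.
  mkdir -p image
  docker save -o image/laravel.tar "$ecr_image"

  # --password-stdin keeps the password out of the process list.
  echo "${DOCKER_REGISTRY_PASSWORD:?}" | docker login -u "$DOCKER_REGISTRY_USER" --password-stdin
  docker push "$hub_image"
}
```

In .gitlab-ci.yml, the image/ directory would be listed under the job's artifacts: paths so that later stages receive the tarball.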

4.2 Build stage

In this section of the pipeline:

Install the Composer dependencies so that all required packages are available.

Preserve essential artifacts, such as the 'vendor' directory and the '.env' file, for later use in subsequent stages.
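A job script covering these two steps might look like the sketch below. The Composer flags are standard. Creating .env from .env.example and generating APP_KEY are assumptions about how the repository's .env is produced.

```shell
#!/usr/bin/env bash
# Sketch of the build job script.
set -euo pipefail

build_dependencies() {
  # Install PHP dependencies into vendor/ without interactive prompts.
  composer install --no-interaction --prefer-dist --no-progress --optimize-autoloader
  # Create .env from the template if the repository does not provide one.
  if [[ ! -f .env ]]; then
    cp .env.example .env
  fi
  # Write APP_KEY into .env; Laravel needs it for encryption and sessions.
  php artisan key:generate --force
}
```

The job would then declare vendor/ and .env under artifacts: paths, which is how they reach the test stage.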

4.3 Test stage

Test the container by running the following command:
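The actual test command is not reproduced here. As one plausible sketch, assuming the job runs inside the Laravel image with the build stage's vendor/ and .env artifacts, the suite can be run with PHPUnit. The guard gives a clearer error when the artifacts are missing.

```shell
#!/usr/bin/env bash
# Sketch of the test job script (PHPUnit assumed as the test runner).
set -euo pipefail

run_tests() {
  # vendor/ arrives as an artifact from the build stage.
  if [[ ! -x vendor/bin/phpunit ]]; then
    echo "vendor/bin/phpunit not found: were the build artifacts downloaded?" >&2
    return 1
  fi
  # A non-zero exit status fails the job and blocks the merge request.
  vendor/bin/phpunit --colors=never
}
```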

4.4 ECR_push_prod stage

In this section:

Pull the AWS base image to connect to AWS ECR. The AWS_ACCESS_KEY_ID, AWS_DEFAULT_REGION, and AWS_SECRET_ACCESS_KEY variables must be defined in Settings → CI/CD → Variables.

Load the Laravel image saved in the Docker_build_dev stage.

Delete the old image in AWS ECR (do not do this in a production environment).

Push the Laravel image to AWS ECR.
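These steps map onto the sketch below. It assumes AWS_ECR_REPOSITORY holds the full repository URI, the image tarball is image/laravel.tar, and "the old image" is the one tagged latest. The registry host and repository name are derived from the URI with shell parameter expansion.

```shell
#!/usr/bin/env bash
# Sketch of the ecr_push_prod job script.
set -euo pipefail

ecr_push_prod() {
  local registry="${AWS_ECR_REPOSITORY%%/*}"   # host part of the URI
  local repo_name="${AWS_ECR_REPOSITORY#*/}"   # repository name part
  local image="${AWS_ECR_REPOSITORY}:${CI_COMMIT_SHORT_SHA:?}"

  # Log Docker in to ECR with a short-lived token.
  aws ecr get-login-password --region "${AWS_DEFAULT_REGION:?}" |
    docker login --username AWS --password-stdin "$registry"

  # Restore the image, with its ECR tag, saved by docker_build_dev.
  docker load -i image/laravel.tar

  # Demo only: delete the previous "latest" image. Do not do this in production.
  aws ecr batch-delete-image --repository-name "$repo_name" --image-ids imageTag=latest

  docker tag "$image" "${AWS_ECR_REPOSITORY}:latest"
  docker push "$image"
  docker push "${AWS_ECR_REPOSITORY}:latest"
}
```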

4.5 Deploy stage

In this section:

Pull the AWS base image to connect to EKS.

Export an authentication TOKEN from AWS ECR.

Install kubectl.

Create a secret that allows pods to pull images from ECR.

Apply the YAML files in the AWS_K8 directory (see the next section).

Update the deployment by restarting the pods.
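Put together, the deploy job could run something like the sketch below, assuming kubectl is already installed in the job image. The deployment name laravel is hypothetical. ECR authorization tokens expire after 12 hours, so the pull secret is regenerated on every deploy. Piping kubectl create --dry-run=client -o yaml into kubectl apply makes this step work whether or not the secret already exists.

```shell
#!/usr/bin/env bash
# Sketch of the deploy job script.
set -euo pipefail

deploy() {
  local registry="${AWS_ECR_REPOSITORY%%/*}"
  local ns="${AWS_K8_NAMESPACE:?}"

  aws eks update-kubeconfig --name "${AWS_K8_CLUSTER_NAME:?}" --region "${AWS_DEFAULT_REGION:?}"

  local token
  token=$(aws ecr get-login-password --region "$AWS_DEFAULT_REGION")

  # The namespace must exist before the secret can be created in it.
  kubectl apply -f AWS_K8/laravel_namespace.yaml

  # Create or update the image pull secret (idempotent).
  kubectl create secret docker-registry "${AWS_K8_ECR_SECRET_NAME:?}" \
    --namespace "$ns" \
    --docker-server="$registry" \
    --docker-username=AWS \
    --docker-password="$token" \
    --dry-run=client -o yaml | kubectl apply -f -

  kubectl apply -f AWS_K8/
  # Recreate the pods so they pull the newly pushed :latest image.
  kubectl rollout restart deployment/laravel --namespace "$ns"
}
```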

4.6 AWS_K8 files

This directory contains the YAML files for the deployment, ConfigMap, Secret, namespace, and LoadBalancer service.

4.6.1 laravel_namespace.yaml
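The manifest contents are not reproduced in this text. A minimal namespace manifest matching AWS_K8_NAMESPACE = laravel would be:

```shell
#!/usr/bin/env bash
# Sketch: namespace manifest for the Laravel workloads.
set -euo pipefail

mkdir -p AWS_K8
cat > AWS_K8/laravel_namespace.yaml <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: laravel
EOF
```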

4.6.2 deployment.yaml
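The following is a sketch of a deployment, not the repository's file. The regcred pull secret and the laravel namespace come from the pipeline variables. The replica count, the deployment name laravel, the ConfigMap and Secret names laravel-config and laravel-secret, and the image placeholder are assumptions. imagePullPolicy: Always makes a rollout restart re-pull the :latest tag.

```shell
#!/usr/bin/env bash
# Sketch: deployment manifest for the Laravel container.
set -euo pipefail

mkdir -p AWS_K8
cat > AWS_K8/deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: laravel
  namespace: laravel
spec:
  replicas: 2
  selector:
    matchLabels:
      app: laravel
  template:
    metadata:
      labels:
        app: laravel
    spec:
      # Pull secret created by the deploy stage (AWS_K8_ECR_SECRET_NAME).
      imagePullSecrets:
        - name: regcred
      containers:
        - name: laravel
          # Placeholder: replace with the ECR repository URI.
          image: <account-id>.dkr.ecr.<region>.amazonaws.com/laravel:latest
          imagePullPolicy: Always
          ports:
            - containerPort: 80
          envFrom:
            - configMapRef:
                name: laravel-config
            - secretRef:
                name: laravel-secret
EOF
```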

4.6.3 service.yaml
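This is a sketch of the LoadBalancer service. The selector assumes the pods carry the label app: laravel. On EKS, a Service of type LoadBalancer provisions an AWS Elastic Load Balancer. Its hostname appears under EXTERNAL-IP in kubectl get service laravel -n laravel.

```shell
#!/usr/bin/env bash
# Sketch: expose the Laravel pods on port 80 through an AWS load balancer.
set -euo pipefail

mkdir -p AWS_K8
cat > AWS_K8/service.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: laravel
  namespace: laravel
spec:
  type: LoadBalancer
  selector:
    app: laravel
  ports:
    - port: 80          # port exposed by the load balancer
      targetPort: 80    # Apache port inside the container
      protocol: TCP
EOF
```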

4.6.4 secret.yaml
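This sketch of the Secret uses placeholder values and a hypothetical name, laravel-secret. stringData accepts plain text, and Kubernetes stores it base64-encoded under data. Base64 is an encoding, not encryption, so real values are better kept out of the repository, for example by injecting them from CI/CD variables at deploy time.

```shell
#!/usr/bin/env bash
# Sketch: Secret with sensitive Laravel settings (placeholder values only).
set -euo pipefail

mkdir -p AWS_K8
cat > AWS_K8/secret.yaml <<'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: laravel-secret
  namespace: laravel
type: Opaque
stringData:
  APP_KEY: "base64:REPLACE_ME"
  DB_PASSWORD: "REPLACE_ME"
EOF
```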

4.6.5 configMap.yaml
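This sketch of the ConfigMap holds non-sensitive Laravel settings, which the containers can load as environment variables. The name laravel-config and every key and value are illustrative assumptions. Sending logs to stderr lets kubectl logs show them.

```shell
#!/usr/bin/env bash
# Sketch: ConfigMap with non-secret Laravel environment settings.
set -euo pipefail

mkdir -p AWS_K8
cat > AWS_K8/configMap.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: laravel-config
  namespace: laravel
data:
  APP_ENV: "production"
  APP_DEBUG: "false"
  LOG_CHANNEL: "stderr"
  DB_CONNECTION: "mysql"
  DB_HOST: "REPLACE_ME"
  DB_PORT: "3306"
EOF
```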

5. Conclusion

I have built a complete GitLab CI/CD pipeline that deploys the Laravel app on AWS EKS, using ECR to store the Laravel image and eksctl to create the EKS cluster.
